The Political Distillation of AI Sovereignty

Protectionism is breaking globalization. Can AI sovereignty initiatives on autopilot encode and automate established corruption in a post-global world?

I have a vivid set of memories from 2020: nations hoarding masks and oxygen tanks, empty shelves and overpriced goods whose prices never returned to what they once were, social distancing and TikTok songs. But perhaps the saddest part, for those who endured all of this, was seeing a world that failed to unite against a common enemy and, even worse, fractured instead. For those watching politics from within, the message was a daunting one: sovereignty isn’t just national pride; it’s survival of the richest.

Five years later, Donald Trump is leading the U.S. once again, this time with a cryptic agenda that leans strongly into anti-globalization and embodies a new reading of protectionist idealism. Is it borderline absurd for a nation that unilaterally decided its national currency would be the global reserve currency, replacing both the gold standard and Keynes’ Bancor, to disregard the geopolitical implications of actions taken at home? A reasonable question, I dare say. Dropping both Bancor and the gold peg in favor of the U.S. dollar has certainly made America rich and sponsored the classic American dream, but it also consolidated disproportionate power in Washington’s hands. While this system allowed the U.S. to prosper in the long term, any misstep or poorly considered geopolitical move now has a far greater global impact than it would have had if the world had adopted something like Bancor, whose design was meant to provide a more balanced, cooperative economic framework that could mitigate the instabilities of individual nations. It sure feels as if Trump’s America never heard Uncle Ben’s famous line: “With great power comes great responsibility”. And following the fresh announcement of Trump’s tariffs, Steve Ballmer, flanked by Bill Gates and Satya Nadella, said that “disruption is very hard on people”. Is America doing what it needs to do, then? Is this disruption disguised as chaos? Only time will tell. However, Trump’s increasingly aggressive stance toward other nations could in fact bolster the legitimacy of alternatives championed by others, such as a global open trading system or something along the lines of the BRICS+ aspirations (e.g., moving away from the IMF, World Bank, and SWIFT in favor of more independent financial systems).
And this is a sobering reality that often goes unspoken in mainstream discourse: alternatives to the current global order do exist, but the nation wielding the most hard power in the global arena may continue to lead primarily through threats, coercion, and all flavors of retaliation, making potential reforms contingent upon its approval. Should this approval be withheld, the consequences could be far-reaching. Bullying, no doubt, but true nonetheless.

But now that widespread efforts to safeguard AI sovereignty are on our doorstep, we are about to witness yet another wave of initiatives that will rewrite transgenerational geopolitics. If we allow this to fuel yet another zero-sum race, it could end the world as we know it, driving mass automated exploitation with little regard for whether we could have done better as a species, as people, and as the sole intelligent lifeform we know of.

The Next Step in the Long Betrayal of Democratic Tenets

We failed, and then our most idealistic frameworks also failed us. Monopolies and cartel-like behavior have existed for centuries, and the big-tech era has certainly proved that corporations can and do act like governing entities, with enough political clout and power over the economies of their host nations to become key decision-makers in shaping global economics. Sectors like oil and banking, notorious for their monopolies and empires, were shaped by centuries of inaction from policymakers and, much like them, modern monopolies also got cozy with lawmakers.

Monopolies were not merely the unplanned outcome of laissez-faire capitalism; they were often supported and even nurtured by governments themselves, creating “regulated monopolies” that can, sure enough, sponsor new forms of “cartels” that dominate the modern world. Railroads, oil and steel shaped the “old money” set of cartels, and now the cloud, chip and software industries have forged their 21st-century versions: institutions that further stall human progress in favor of different flavors of slavery, complacency and consumerism. You’re free, as long as you can pay all your monthly subscription plans, for every facet of your life, forever. Somehow, the normalization of these practices turned our lives on this planet into a pay-as-you-go game. That would be alright if state institutions were on our side, but it seems that hope always was and always will be naive: “you don’t understand how the world works” is what folks will tell you, right after branding you an idealist, if you even mention the snowball effects of corruption, cartels and the indiscriminate use of money to support any and all types of immoral developments.

AI will eventually automate this social engine at scale, from feeding your data into systems to generating business strategies that extract every dollar from consumers. If you think technology will offer salvation, it’s time to reconsider: it won’t. If you believe companies are already squeezing every penny from their operations today, imagine the super-efficient version of this process, applied across all sectors and industries and affecting every aspect of our lives. And none, I emphasize, none of it will favor you as a consumer. The exploitative dynamics that have prevailed since the early 2000s will be amplified by AI, enabling greed to rise above all else. In the meantime, lawmakers are unlikely to act, as they have consistently avoided challenging the interests of their commercial sponsors: the entrenched powers and national champions that hold sway over policy.

Left on the autopilot of political and commercial cartels, AI won’t bring fairness, won’t solve macrosocial issues, and will very likely further exploit the dynamics that once led me to revisit Wallerstein’s ideas as a new framework for the modern politics of AI, something I termed the AI Core-Periphery framework (ACP).

The dynamics and characteristics of the parts explored in the ACP illustrate how geopolitical interests are ingrained in modern AI deals and arrangements of power. The ACP makes clear that classic power projection is very much alive, and that the political institutions in place, instead of being updated or adjusted to accommodate modern interests, further entrench post-war thinking.

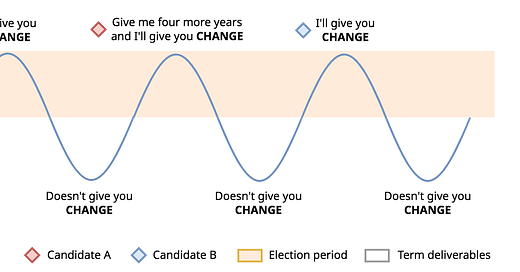

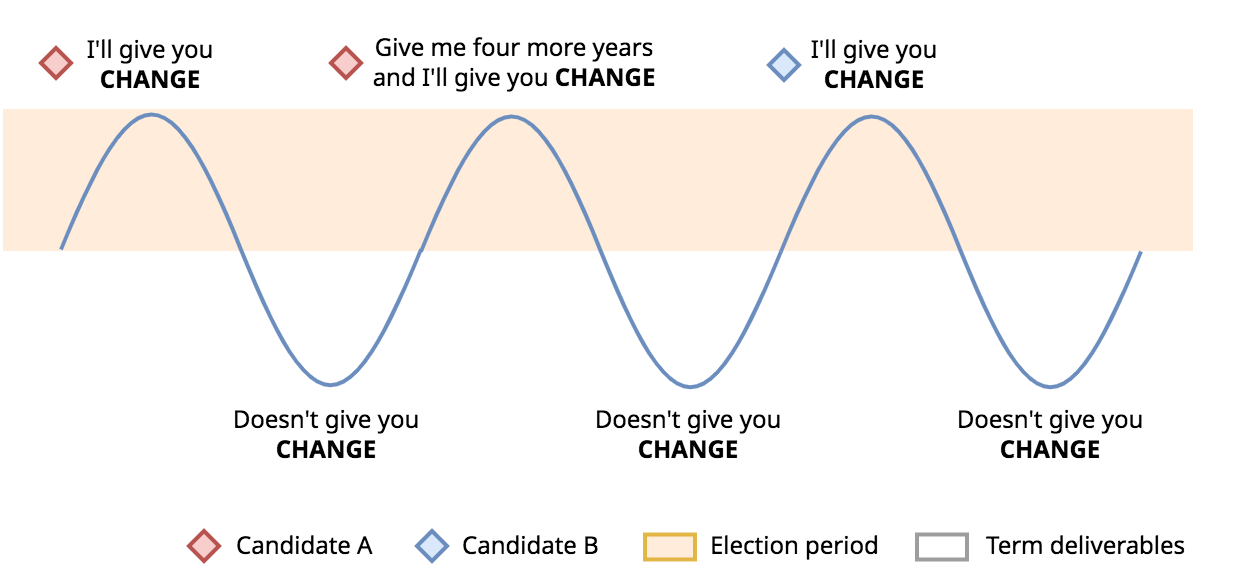

These arrangements erode democracies from within. Political rhetoric becomes little more than a reflection of what the electorate wants to hear, while politicians across the globe continue to trade their promises for votes in the next election cycle. As each term ends, they assure the public that in their second term all will be delivered: promises fulfilled, and the nation restored to the mythical golden age it once had. This narrative, akin to a biblical prophecy, remains unfulfilled, yet the cycle repeats. The opposition candidate steps forward, claiming to possess the answers that the previous administration could not deliver, and so the ouroboros of political inertia continues. No matter how far technology advances, enabling us to perform tasks more efficiently, citizens are still bound by the same stagnant macro-social dynamics: rising taxes, unrelenting workweeks, and the illusion of progress.

In this electoral quagmire, democratic institutions find themselves imprisoned by regulatory capture and gradually corrode from within. They end up destroyed, reformed under duress or, worse, overtaken by the learned helplessness of their own staff, disillusioned by inefficacy and path-dependent politics. Our public services, along with the very health of our democracies, suffer as a result. And rather tragically, AI sovereignty initiatives, born with the intent to exploit these very dynamics, are then bound to fail their ultimate purpose, as they are fated to become just the latest scapegoat in a political scheme too entrenched in corruption, cartelism, and profit-driven motives to innovate. Such a system is, by definition, incapable of evolving, and thus it stifles growth and strengthens the very weaknesses it claims to address.

Statecraft and AI Sovereignty: A Look Under the Hood

By now, it is pretty evident that nations in general, and especially the major powers championing AI, informally the U.S. and China, aren’t merely building AI; they’re fortifying castles. Where we should be witnessing progress, we see Monopoly 4.0: first oil, then weapons, then tech, and now intelligence, with each era promising change only to end in locked-in power instead. And it is these powers that be that fund the very base of the pyramid that defines AI power, as laid out in my AISRM framework: AI Supply Risk Management isn’t optional.

Of course, all the initiatives listed in the AISRM represent parallel efforts, as is the nature of governmental processes. However, the stack clearly illustrates that at the foundation of potential geopolitical autonomy in AI lies critical infrastructure, the enabler of true tech-sovereignty endeavors. In the EU, a range of initiatives is driving the creation of AI factories: digital ecosystems that provide computing power, data and talent to EU members, geared particularly towards generative AI models. Announced at the recent Paris AI Action Summit, a movement led primarily by France and India advocates a “third way” in AI, seeking to establish a third pole of AI power that counterbalances the initiatives of the U.S. and China and offers a potential path to independence from the gravitational pull of these two global giants.

In the U.S., standing in a building that hosts the world’s second fastest supercomputer at an original Manhattan Project site, U.S. Secretary of Energy Chris Wright, alongside Greg Brockman, co-founder and president of OpenAI, declared: “We’re at the start of Manhattan Project Two. It is critical, just like Manhattan Project One, that the United States wins this race, […] we could lose this race in many ways if we don’t get energy right, we don’t unleash American energy”. And President Trump endorses these initiatives, facilitating the construction of data centers on federal lands, including storied nuclear research labs, in an effort to fast-track nuclear energy generation and bolster U.S. AI capabilities.

Unsurprisingly, the concept of sovereign tech stacks is closely linked to the defense industrial base and national security. What may come as a surprise is that the U.S. and China are collaborating on military AI practices to establish critical consensus in key domains, including the emerging AIxBio risks. The introduction of Mutual Assured AI Malfunction (MAIM) in March 2025, proposed by U.S. researchers as a strategic concept, further underscores how the notion of sovereign AI is reshaping international relations. Analogous to Mutual Assured Destruction (MAD) in nuclear deterrence, MAIM suggests that attempts by one nation to dominate AI could provoke preventive sabotage by rivals, creating a balance of power through mutual vulnerability. The concept mirrors U.S. cyber defense strategy, which is built around the doctrine of “defend forward”, another way of saying “I’ll hack you first, but I won’t do anything if you play ball”. And of course, for China, these doctrines present challenging dynamics: while the operating systems favored by the military and government may be safer, the majority of personal computers in China run Windows and most smartphones use Android, operating systems that are not only widely used but also often vulnerable to COTS hacks and undisclosed zero-day flaws, which allow the U.S. to keep leveraging whatever its capabilities offer and its covert doctrines demand.

The Era of Techno-economic Protagonism

While much of the discourse surrounding “sovereign AI” remains fixated on its technical or nationalistic dimensions, my analysis, shaped by the intricate interconnections between politics, geopolitics, and sovereignty, moves beyond this narrow framing to put a bifurcated vision of the global AI landscape into perspective. Following the paradigm of the ACP framework, one can use its triadic hierarchy to refine these roles into a more politically coherent narrative. Core AI nations are then seen as Techno-economic Protagonists (TEPs): nations that wield comprehensive mastery over, or have comprehensive access to, the full technological stack (compute, data, talent, and standards) needed to sustain their leadership, the true architects of AI power on the global stage. In contrast, the remainder of the world falls into the other two ACP categories: semi-peripheral states engage with TEP powers by participating in their business ventures and initiatives, while peripheral nations are primarily consumers of the AI services, products, and infrastructure provided by the former two. However, attaining leadership in this arena is no simple feat. TEP status hinges on an intricate web of dependencies, wherein sovereignty over AI presupposes dominion across interlocking “sovereignties”: managing dependencies and replacing them whenever possible, through initiatives that involve economic, technological, and geopolitical strategies and capabilities.

This perspective necessitates a further distinction, one that separates market-driven solutions and infrastructure, typically privately owned but with deep ties to state sponsorship, from the technological stacks that bolster political and geopolitical agency, which remain under the purview of state institutions at federal, state, and local levels. Through this lens, the Pillars of a Techno-economic Supremacy diagram shows the critical enablers that distinguish a sovereign AI power from its aspirants. Together, these frameworks underscore a central contention: AI sovereignty is not an isolated technical feat but a systemic geopolitical achievement, contingent upon the alignment of public authority and private innovation within a nation’s strategic ambit.

As illustrated by the diagram, the concept of “sovereign AI” extends far beyond the mere possession of models or GPUs. It calls for the establishment of a comprehensive, nationwide technological stack, comprising both sovereign technologies and enabling infrastructures, that empowers a nation to achieve full autonomy in the digital sphere as a whole. To aid in this understanding, the diagram features a progress bar that quantifies the extent to which each layer of sovereignty is available, offering a clear gauge of a nation’s progress toward full autonomy across these critical technological layers. The underlying premise is that true sovereignty in AI will ultimately require far more than a language model alone demands, as safeguarding the independence and breadth of AI-powered solutions necessitates a continuously expanding and evolving technological stack.
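
For readers who think in code, the progress-bar idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the scoring method behind the diagram: the `Layer` class, the `available`/`required` counts, the layer names (borrowed from the compute, data, talent and standards stack mentioned earlier) and every number are hypothetical, and treating the weakest layer as the binding constraint is my own simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One layer of the stack, e.g. compute or data (hypothetical model)."""
    name: str
    available: int  # capabilities the nation controls or can reliably access
    required: int   # capabilities the layer needs in total

    @property
    def coverage(self) -> float:
        """Share of the layer that is available, clamped to the range 0..1."""
        if self.required <= 0:
            return 0.0
        return max(0.0, min(1.0, self.available / self.required))


def progress_bar(layer: Layer, width: int = 20) -> str:
    """Render one layer as a text progress bar."""
    filled = round(layer.coverage * width)
    bar = "#" * filled + "." * (width - filled)
    return f"{layer.name:<10} [{bar}] {layer.coverage:.0%}"


def weakest_layer(layers: list[Layer]) -> Layer:
    """Return the layer with the lowest coverage (assumed binding constraint)."""
    return min(layers, key=lambda layer: layer.coverage)


if __name__ == "__main__":
    # Illustrative numbers only, not measurements of any real country.
    stack = [
        Layer("compute", 3, 10),
        Layer("data", 7, 10),
        Layer("talent", 5, 10),
        Layer("standards", 2, 10),
    ]
    for layer in stack:
        print(progress_bar(layer))
    print("Binding constraint:", weakest_layer(stack).name)
```

Reading the stack through its weakest layer, rather than an average, reflects the argument that sovereignty over AI presupposes sovereignty across every interlocking layer: a nation strong in talent but dependent on foreign compute is still dependent.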

Machine learning, in its current form, is limited. What we must aim for is an AI that builds a true representation of the world, allowing it to learn, reason, and plan. — Yann Le Cun

To achieve this autonomy, a nation needs to secure its own tailored set of contracts with providers; enable local investment to share space with allied providers; establish access to critical minerals, equipment and innovation streams; fight the established cartels and corruption schemes that can lock fast-paced developments and open-data procedures into specific vendors; and orchestrate all of this with defense, national security and the digital literacy of end users, so that local markets can not only provide sovereign values but project them in ways that can be aligned with others.

While not every nation will have the capabilities required for autonomy in every domain that a fully sovereign AI demands, like-minded blocs and allies can, and should, share. Eventually, as AI capabilities evolve, building their own marketplaces, from microelectronics to hyperscalers and reliable energy mixes, should become easier to achieve thanks to intelligent agents. But importantly, countries need to break down silos, balance serial and parallel initiatives, and build national capabilities that can quickly adapt and secure their own interests.

Just as back-office functions are where today’s AI arrives first, infrastructure is where true sovereignty arrives first. Transgovernmental priorities should become stable, with proper auditing, talent retention and procurement initiatives. And having the support of your own citizens is paramount: trust that AI and other technological initiatives are necessary and reliable is what sets efforts apart, and steering away from a digital police state should be concern number one for nations that understand the challenge of the next decade.

Earlier this year, Emmanuel Macron said that “the future of AI is a political issue, one of sovereignty and strategic independence”. But nobody is discussing the monopolies and cartels that may be forged, reinforced or merged in this process. The world of politics is, unfortunately, riddled with hidden motives, agendas and inefficiency, and the idea of national champions is ripe to become the pretext for more corruption and more cartelism. We can automate these massive issues, or fix them. What will the world do?

Future Costs of Automated Corruption

AI sovereignty, if captured by corruption and cartelism, risks not just entrenching today’s inefficiencies but automating them into a future of profound dysfunction. Consider the trajectory: lawmakers and leaders, steeped in short-termism and influence peddling, may steer sovereign AI initiatives toward familiar beneficiaries—legacy contractors, state-backed firms and political cronies—securing contracts not through innovation but through the well-worn paths of lobbying and kickbacks. These behemoths, burdened by many “corrupt mouths to feed” and lacking the nimbleness of true innovators, will lean on vendor lock-in and bloated systems, slowing the pace of AI advancement while cementing their dominance. This is no mere inefficiency; it’s a moat around power, with AI as the drawbridge.

Fast-forward a decade: by 2035, AI’s sophistication will increasingly enable it to correlate vast datasets and expose cartel behaviors—price-fixing, bid-rigging, anomalous corporate data and nepotistic deals—rendering corruption transparent to any system worth its salt. Yet, if today’s initiatives encode corrupt schemes into AI’s architecture, the cost of this clarity will be steep. Path dependence ensures that unwinding such perversions—realigning AI from serving cartels to serving the public—will demand a dual expenditure: taxpayers will have funded the automation of graft once, only to pay again to dismantle it. Worse, an AI misaligned to connive with corruption poses existential risks—national security falters when rivals wield untainted systems, and international competition tilts toward those who avoided this trap. AI sovereignty, so vital now, could thus become a double-edged sword, either exposing and purging systemic rot or amplifying it into an automated dystopia. The choice hinges on governance today.

Moreover, what’s notably absent from prevailing AI sovereignty discussions is an honest reckoning with the institutional capture that plagues modern governance. The discourse often assumes benevolent technocratic control and the pursuit of fair, inclusive, public-interest AI, when reality presents an intricate web of backroom deals, regulatory arbitrage, designed blind spots, revolving doors, and influence markets. These aren’t mere aberrations but deeply embedded features of the present system, a system now poised to architect AI’s foundational infrastructure, alignment and values. The stakes transcend mere inefficiency: corrupt AI sovereignty initiatives threaten to codify asymmetric information advantages, allowing those with privileged access to exploit predictive models while maintaining plausible deniability. This represents not just economic rent-seeking but a potential lock-in of power disparities that could persist for generations. While technical specifications dominate public discourse, the governance structures being quietly established today will determine whether sovereign AI serves as society’s immune system against corruption or becomes its most sophisticated enabler ever.

Before asking for more headcount and resources, teams must demonstrate why they cannot get what they want done using AI. — Shopify CEO Tobias Lutke in April 7.

Within a decade, we face a profound bifurcation as both organizations and individuals become increasingly “AI-native.” Much as the internet divided the world into digital natives and laggards, AI fluency will become the new determinant of adaptability and success. Thousands of companies in all sectors will not merely deploy AI but fundamentally reconstitute themselves around it—thinking, designing, and organizing through AI-mediated processes. Individual citizens, too, will develop cognitive partnerships with these systems, changing how humans interface with institutions and each other. If corruption becomes baked into this new substrate, we risk not just repeating history’s mistakes but algorithmically amplifying them—creating self-reinforcing feedback loops of institutional failure. Each interaction with compromised systems would further entrench corrupt norms, making them increasingly invisible yet more pervasive. The alternative—designing sovereignty frameworks with radical transparency and distributed oversight—offers not just efficiency but evolutionary potential: AI systems that continuously improve governance rather than calcify its failings. The question is not whether AI will transform our institutions, but whether we’ll let captured interests determine the direction of that transformation.